Draw to Print

Web-based modeling tool for 3D printing

Draw-to-Print challenges the conventional 3D modeling-to-printing pipeline, which relies on predefined workflows within established 3D modeling ecosystems. Traditional slicing software constrains the potential of printing, and widely used digital modeling methods often lack support for intuitive, arbitrary creation. These barriers limit the accessibility and creative freedom for users, especially those unfamiliar with traditional 3D modeling tools.

This project aims to develop a tool that allows users to directly and intuitively create print paths in 3D space without the constraints of conventional modeling paradigms. By removing unnecessary complexity, Draw-to-Print enables a more fluid and expressive approach to 3D printing, making it accessible to a broader range of creators.

Collaborator: Zhishen Chen

Instructor: Jose Luis Garcia del Castillo Lopez

April - May 2023

Harvard University Graduate School of Design

Problem Statements

How does the traditional 3D modeling-to-printing paradigm work?

The Conventional 3D Printing Workflow

The standard 3D printing workflow follows a structured pipeline: mesh modeling → slicer processing → G-code generation → printing. Each step is separate, reinforcing a segmented approach to creation.

The Slicer’s Role and Its Disconnection

Dissociation in CAD and 3D Printing

The slicer process serves as an intermediary step that translates modeling data into printable data. However, this introduces a fundamental disconnect: the logic used for printing differs from the logic originally used to create the model.

General modeling software is used for object creation, while slicer tools are applied afterward to convert digital models into printable instructions. This results in a fragmented workflow, disrupting seamless design-to-print integration.

A major limitation of the slicer process becomes evident when attempting to print single-layer, non-planar PLA objects or arbitrary patterns. Traditional slicers are optimized for layered, planar slicing and often lack built-in support for such unconventional geometries, restricting creative and functional possibilities.

1

Can We Model The Printing Path Directly?

Imagine a tool that seamlessly bridges arbitrary modeling and 3D printing, translating creative input into printable output through an intuitive and hassle-free process.

Let's dive deeper

What are the fundamental problems here?

Traditional 3D modeling is misaligned with additive manufacturing, which relies on printing paths in space. General slicing programs further constrain creative potential by enforcing rigid, layer-based workflows.

The fundamental question remains:

How can users intuitively create lines and curves in true 3D space using a 2D interface?

Drawing is one of the most intuitive forms of creation. However, on a simulated 2D screen, the limited depth perception of 3D space forces the arbitrary drawing process to be broken into two steps: drawing and adjusting. Before even addressing printing challenges, we first encounter a fundamental issue:

the unnatural fragmentation of freeform creation in 3D modeling.

The Simplicity of 2D Drawing

Drawing a 2D line—whether on paper or a digital canvas—has always been an effortless and natural action. However, when the experience extends into 3D, the interaction dynamics fundamentally change.

The Challenge of 3D Drawing on a 2D Screen

When using a computer (whether on a web interface or a 3D modeling tool), drawing a 3D line becomes significantly more challenging. Since the 3D environment is simulated on a 2D screen, users lose their natural spatial awareness, making arbitrary and intuitive 3D line creation difficult.

The Reliability of Real-World Drawing

In real space, drawing a 3D line—such as in a VR environment—feels intuitive because people inherently understand depth and spatial positioning. Their real-world cognition of 3D space allows them to place strokes naturally and fluidly.

The Limitations of Traditional 3D Modeling

In conventional 3D modeling tools, creating a 3D line typically requires clicking and dragging in a 2D perspective, followed by manual adjustments to vertices and properties. While effective for structured modeling, this process becomes inefficient for fluid, irregular, and arbitrary creations, limiting the spontaneity and expressiveness of 3D drawing.

2

How can we make 3D drawing more intuitive on a 2D interface?

I made a few attempts at this problem before this project.

Roller Coaster allows users to draw their own coaster path using a mouse. However, the depth of each point is randomly assigned, which highlights the same challenge: how to translate a 2D drawing into a 3D path or, more specifically, how to determine the Z-depth data. (2023)

Drawing in 3D is a web-based experiment where users draw in a 3D scene using a 2D cursor. The approach relies on an invisible (or visible) object in the scene as an intersection reference for ray-casting, providing a depth anchor for the mouse position. While this method doesn’t allow precise control over depth, it suggests that ray-casting could be a viable solution for depth perception in arbitrary 3D line drawing. (2022)

Design Challenges

We are able to separate the challenges into two topics:

Draw

1. How to get the depth or ‘Z’ orientation data when drawing with the 2D cursor?

2. How to let the user control the depth of their mouse pointer when drawing?

3. How to help users gain spatial (depth) awareness when drawing in 2D?

Print

1. When transforming arbitrarily drawn lines into printable sections without slicing, how should we refine the line mesh so that the intuitively drawn model is preserved as far as possible while remaining printable? In other words, which limitations and features does the mesh refinement process need?

Our Solution

How can we make 3D drawing more intuitive on a 2D interface?

Our approach addresses the challenges of intuitive 3D drawing and seamless 3D printing by creating a workflow that connects arbitrary drawing in 3D space with precise model refinement and direct G-code generation.

For drawing, we utilize techniques like ray-casting and depth referencing to translate 2D inputs into coherent 3D paths, enhancing user cognition of depth and control over the Z-dimension.

For printing, we refine arbitrary paths into optimized models, ensuring feasibility while preserving the fluidity of the original design. The resulting G-code is directly exportable, enabling an integrated and efficient process from creation to 3D printing.

We provide users with a seamless journey, starting from intuitive arbitrary drawing in 3D space. This is enhanced through computational refinement, which optimizes their designs while maintaining the essence of their creativity. Users can loop back to refine or recreate their drawings as needed. With a single click, the journey continues by exporting the design into G-code, ready for 3D printing without requiring any additional steps. This streamlined process makes creating and printing intuitive, efficient, and accessible.

Arbitrary Drawing

Refine model

Export G-code

3D Printing

Design Features

[0]

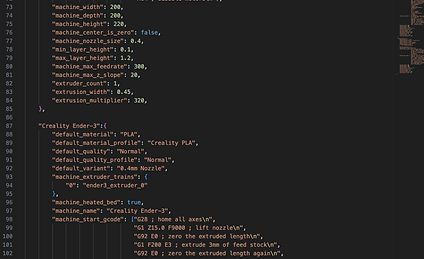

Set up a web app environment with Node.js

[1]

Create a 3D modeling interface with THREE.js

[2]

Find a way to draw in 3D space

[2] Design Solution Specification:

Solving the arbitrary drawing problems

Our design solution uses surfaces as a visual reference so that users can perceive relative position (depth). The web app offers two surface orientations. Drawing works as follows:

1. The user presses the W and S keys to control the surface depth.

2. The user clicks on the surface with the cursor.

3. The surface detects the cursor by ray-casting and records the point position.

After the user presses Enter, the recorded points are joined into a single line.
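The surface-based drawing steps above amount to intersecting a cursor ray with a movable plane. The following is a minimal sketch in plain Python, not the app's actual code: the app is built on THREE.js, which provides its own raycaster, and the names `DrawingSurface`, `step`, and `ray_plane_intersection` are hypothetical. It assumes a z-oriented surface (a plane of constant z) and that the cursor has already been converted into a ray origin and direction.

```python
from dataclasses import dataclass, field


def ray_plane_intersection(origin, direction, plane_point, plane_normal, eps=1e-9):
    """Return the point where a ray hits a plane, or None if it misses."""
    denom = sum(d * n for d, n in zip(direction, plane_normal))
    if abs(denom) < eps:  # ray runs parallel to the plane
        return None
    diff = tuple(p - o for p, o in zip(plane_point, origin))
    t = sum(a * n for a, n in zip(diff, plane_normal)) / denom
    if t < 0:  # plane lies behind the ray origin
        return None
    return tuple(o + t * d for o, d in zip(origin, direction))


@dataclass
class DrawingSurface:
    """Hypothetical z-oriented drawing surface (plane z = depth)."""
    depth: float = 0.0
    step: float = 1.0  # assumed distance moved per key press
    points: list = field(default_factory=list)

    def on_key(self, key):
        # W and S move the surface along its normal.
        if key == "w":
            self.depth += self.step
        elif key == "s":
            self.depth -= self.step

    def on_click(self, ray_origin, ray_direction):
        # Record the point where the cursor ray meets the surface.
        hit = ray_plane_intersection(
            ray_origin, ray_direction, (0.0, 0.0, self.depth), (0.0, 0.0, 1.0)
        )
        if hit is not None:
            self.points.append(hit)
        return hit

    def finish_line(self):
        # Enter: hand back the recorded points as one line and start over.
        line, self.points = self.points, []
        return line


surface = DrawingSurface()
surface.on_key("w")
surface.on_click((0.0, 0.0, 10.0), (0.0, 0.0, -1.0))
surface.on_click((2.0, 3.0, 10.0), (0.0, 0.0, -1.0))
line = surface.finish_line()
```

Because the depth is fixed by the surface rather than guessed from the cursor, every recorded point has a well-defined position along the surface normal, which is what the W and S keys control.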

[3]

Curve simplification

(Ramer-Douglas-Peucker Algorithm)

Because the user may draw thousands of points, we simplify the line further to keep the web app responsive.

Fabian Hirschmann

https://github.com/fhirschmann/rdp/blob/master/docs/index.rst
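To illustrate the simplification step, here is a minimal sketch of the Ramer-Douglas-Peucker algorithm in plain Python. It is not the referenced library or the app's own code. The function names and the `epsilon` tolerance are illustrative, the loop is iterative so that long strokes do not hit Python's recursion limit, and distances are measured to the segment rather than to the infinite line, a common variant that also copes with strokes whose endpoints coincide.

```python
import math


def _point_segment_distance(p, a, b):
    """Distance from point p to the segment a-b (any dimension)."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    ab_len_sq = sum(c * c for c in ab)
    if ab_len_sq == 0.0:  # degenerate segment: a and b coincide
        return math.dist(p, a)
    t = sum(x * y for x, y in zip(ap, ab)) / ab_len_sq
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    closest = [ai + t * c for ai, c in zip(a, ab)]
    return math.dist(p, closest)


def rdp(points, epsilon):
    """Simplify a polyline, keeping points farther than epsilon from the chord."""
    n = len(points)
    if n < 3:
        return list(points)
    keep = [False] * n
    keep[0] = keep[-1] = True
    stack = [(0, n - 1)]
    while stack:
        start, end = stack.pop()
        index, max_dist = -1, 0.0
        # Find the point farthest from the chord start-end.
        for i in range(start + 1, end):
            d = _point_segment_distance(points[i], points[start], points[end])
            if d > max_dist:
                index, max_dist = i, d
        # Keep it and split there if it deviates more than the tolerance.
        if index != -1 and max_dist > epsilon:
            keep[index] = True
            stack.append((start, index))
            stack.append((index, end))
    return [p for p, k in zip(points, keep) if k]


stroke = [(0.0, 0.0, 0.0), (1.0, 0.05, 0.0), (2.0, 0.0, 0.0), (3.0, 2.0, 0.0), (4.0, 0.0, 0.0)]
simplified = rdp(stroke, epsilon=0.1)
```

A larger `epsilon` removes more points and makes the stroke coarser. A smaller one preserves more of the hand-drawn detail at the cost of more geometry to render.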

[4]

Use the arbitrary drawing as the sections of modeling

[5]

Refine Section and Generate layers

[5] Design Solution Specification:

Invalid layer recognition and geometry refinement

Because 3D printers differ in their structural limitations, some of the layers drawn by the user cannot be printed. We therefore need a mechanism that detects whether a drawn layer is valid based on the parameters of the target printer.

Some of the validation criteria:

1. The closest and farthest points between the previous layer and the current layer. (This relates to the layer thickness of the printer and filament.)

2. The height fluctuation of the curve. (This relates to the shape and dimensions of the printer's nozzle.)

3. The lateral displacement of the layer.
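The three criteria above could be checked along the following lines. This is a sketch under stated assumptions, not the app's actual validation code: the `PrinterLimits` fields, the function name `validate_layer`, and all threshold values are hypothetical, and both layers are assumed to be resampled to the same number of points so that point i of one layer corresponds to point i of the other.

```python
import math
from dataclasses import dataclass


@dataclass
class PrinterLimits:
    """Hypothetical per-printer thresholds, all in millimetres."""
    min_layer_gap: float      # smallest allowed rise above the previous layer
    max_layer_gap: float      # largest allowed rise above the previous layer
    max_height_range: float   # allowed z variation within a single layer
    max_lateral_shift: float  # allowed horizontal offset from the previous layer


def validate_layer(previous, current, limits):
    """Return a list of problems; an empty list means the layer is valid."""
    if not current or len(previous) != len(current):
        raise ValueError("layers must be non-empty and have matching point counts")
    problems = []

    # Criterion 1: closest and farthest spacing between the two layers.
    gaps = [c[2] - p[2] for p, c in zip(previous, current)]
    if min(gaps) < limits.min_layer_gap:
        problems.append("layers too close")
    if max(gaps) > limits.max_layer_gap:
        problems.append("layers too far apart")

    # Criterion 2: height fluctuation along the current curve.
    heights = [c[2] for c in current]
    if max(heights) - min(heights) > limits.max_height_range:
        problems.append("height fluctuation too large")

    # Criterion 3: lateral (x, y) displacement relative to the previous layer.
    shifts = [math.hypot(c[0] - p[0], c[1] - p[1]) for p, c in zip(previous, current)]
    if max(shifts) > limits.max_lateral_shift:
        problems.append("lateral displacement too large")

    return problems


limits = PrinterLimits(min_layer_gap=0.1, max_layer_gap=0.4,
                       max_height_range=2.0, max_lateral_shift=0.5)
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
good = [(0.0, 0.0, 0.2), (1.0, 0.1, 0.3), (2.0, 0.0, 0.2)]
bad = [(0.0, 0.0, 0.05), (1.0, 1.0, 0.9), (2.0, 0.0, 0.2)]
good_report = validate_layer(base, good, limits)
bad_report = validate_layer(base, bad, limits)
```

Returning the list of failed criteria instead of a single boolean lets the interface tell the user which part of a layer needs to be redrawn or refined.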

[6]

Complete model

Export G-code
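Exporting G-code without a slicer means turning each drawn layer directly into move commands. The sketch below shows one way this could look and is not the app's exporter. The function name `layers_to_gcode` and every default value (layer height, line width, filament diameter, feed rates) are assumptions. Extrusion is estimated with the common rectangular cross-section approximation, and printer-specific start and end sequences such as homing and heating are left out.

```python
import math


def layers_to_gcode(layers, layer_height=0.2, line_width=0.4,
                    filament_diameter=1.75, print_feed=1200, travel_feed=3000):
    """Convert polylines of (x, y, z) points in mm into G-code text."""
    filament_area = math.pi * (filament_diameter / 2) ** 2
    lines = [
        "G21 ; millimetre units",
        "G90 ; absolute positioning",
        "M82 ; absolute extrusion",
        "G92 E0 ; reset extruder position",
    ]
    extruded = 0.0  # cumulative filament length in mm
    for layer in layers:
        if len(layer) < 2:
            continue  # a single point cannot form a printable path
        x, y, z = layer[0]
        # Travel to the start of the path without extruding.
        lines.append(f"G0 X{x:.3f} Y{y:.3f} Z{z:.3f} F{travel_feed}")
        previous = layer[0]
        for point in layer[1:]:
            length = math.dist(previous, point)
            # Deposited volume (length x height x width) divided by the
            # filament cross-section gives the filament length to feed.
            extruded += length * layer_height * line_width / filament_area
            x, y, z = point
            lines.append(f"G1 X{x:.3f} Y{y:.3f} Z{z:.3f} E{extruded:.5f} F{print_feed}")
            previous = point
    return "\n".join(lines) + "\n"


gcode = layers_to_gcode([[(0.0, 0.0, 0.2), (10.0, 0.0, 0.2)]])
```

Each `G1` line carries its own Z value, so non-planar paths are written out exactly as drawn instead of being flattened into horizontal slices.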

[7]

Print

Printing Experiments

Whole process recording

Experience online

Draw to Print is now available online!

Although some features are still being adjusted and built, the basic functions, including drawing, layer generation, and G-code export, are accessible.

Some operation hints:

- Press "z" on the keyboard to switch between the y- and z-oriented drawing surfaces

- Press "w" or "s" to move the surface

- Click on the moving surface to draw

- Press "Enter" on the keyboard to finish the line

- Press "c" to change perspective and view your model

- Export G-code from the top-right bar

Further...

Draw to Print began as a conceptual demo and became the initial idea for a start-up now backed by Autodesk. For detailed information, see: www.reer.co